Introduction

In the first part of this series, we explored why scenarios are central to advancing autonomous driving and driver assistance systems. The industry is gradually shifting away from purely mileage-based testing toward scenario-driven validation, where safety and performance are measured against a structured set of real-world challenges.

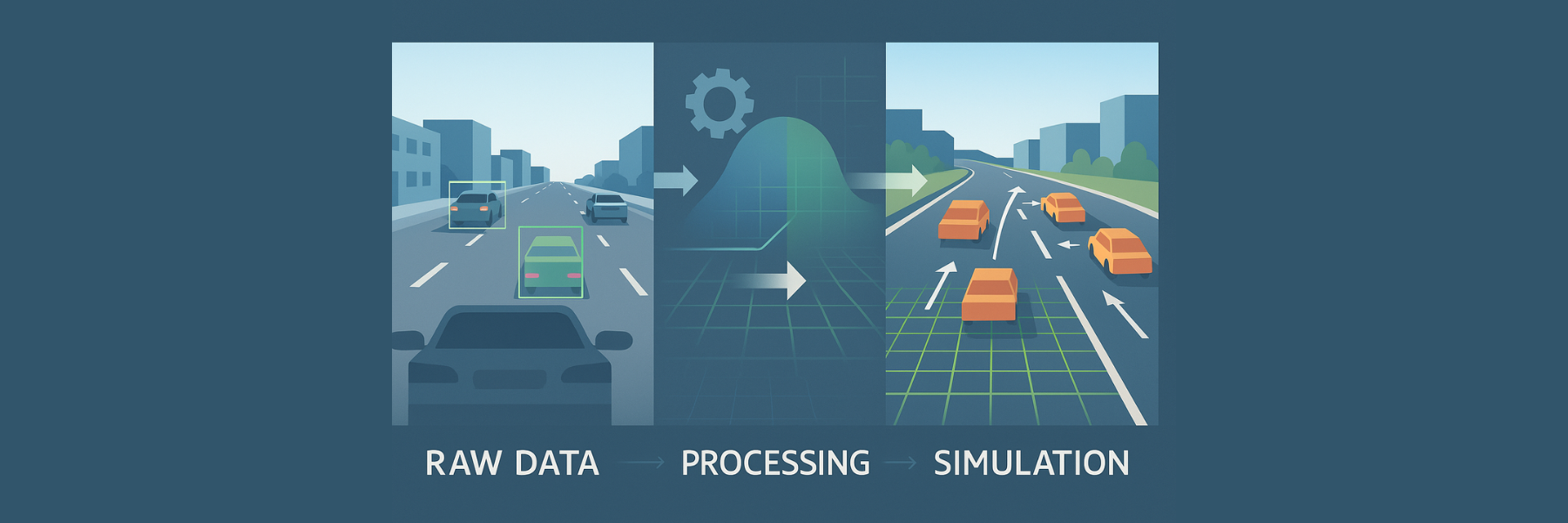

Part 2 turns the spotlight on how we at MATT3R transform raw driving data into simulation-ready scenarios. This is not a conceptual exercise; it is a working pipeline that supports two of our products: Capsul3 FOUNDATION, which delivers diverse edge-case data enriched with tags for AI development, and Capsul3 SCENARIO, which provides simulation-ready scenarios for testing and validation. Both rely on the same backbone: an automated process that turns real-world journeys into structured, high-fidelity assets. Below, we walk through that process step by step.

From Real-World Journeys to Structured Inputs

It all begins on the road. Our K3Y edge device, installed in passenger vehicles, records synchronized video, GPS, and IMU data as people go about their daily drives. Unlike traditional data loggers, K3Y does more than passively record. Edge models running continuously on the device tag anomalies, interventions, and unusual behaviors as they occur. This reduces noise and ensures that only “interesting” events are flagged for further use.
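To make on-device tagging more concrete, here is a minimal sketch of one simple way to flag events from an IMU stream: threshold each sample, then merge consecutive flagged samples into time-bounded events. This is not the model K3Y runs. The rule-based approach, the thresholds (`HARD_BRAKE_MPS2`, `SWERVE_RAD_S`), and every name below are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical thresholds, chosen only for illustration.
HARD_BRAKE_MPS2 = -4.0   # longitudinal acceleration at or below this counts as hard braking
SWERVE_RAD_S = 0.5       # absolute yaw rate at or above this counts as a swerve


@dataclass
class ImuSample:
    t: float         # timestamp, seconds
    accel_x: float   # longitudinal acceleration, m/s^2 (negative = braking)
    yaw_rate: float  # rad/s


@dataclass
class Event:
    tag: str
    start: float
    end: float


def classify(sample: ImuSample) -> Optional[str]:
    """Return a tag for a single sample, or None if nothing unusual is happening."""
    if sample.accel_x <= HARD_BRAKE_MPS2:
        return "hard_brake"
    if abs(sample.yaw_rate) >= SWERVE_RAD_S:
        return "swerve"
    return None


def tag_events(samples: List[ImuSample], max_gap: float = 0.5) -> List[Event]:
    """Merge consecutive samples that share a tag into time-bounded events."""
    events: List[Event] = []
    for sample in samples:
        tag = classify(sample)
        if tag is None:
            continue
        last = events[-1] if events else None
        if last is not None and last.tag == tag and sample.t - last.end <= max_gap:
            last.end = sample.t  # extend the ongoing event
        else:
            events.append(Event(tag, sample.t, sample.t))
    return events


samples = [
    ImuSample(0.0, -0.5, 0.0),
    ImuSample(0.1, -5.0, 0.0),
    ImuSample(0.2, -6.0, 0.0),
    ImuSample(0.3, -1.0, 0.0),
    ImuSample(0.4, 0.0, 0.8),
]
print(tag_events(samples))
# [Event(tag='hard_brake', start=0.1, end=0.2), Event(tag='swerve', start=0.4, end=0.4)]
```

Only the merged events, not every raw sample, would need to travel upstream, which is the noise-reduction idea described above. A learned model would replace `classify`, but the grouping step stays much the same.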

For Capsul3 FOUNDATION, this tagged data is made available in its raw form. Developers can access synchronized video and sensor streams enriched with contextual tags, making it easier to identify edge cases that matter most for training foundation models and perception systems.

When the same flagged data is fed into our reconstruction pipeline, it forms the basis for Capsul3 SCENARIO, where the flagged events are reconstructed into scenarios and converted into formats that can be replayed in simulation.

The Global Occupancy Grid: A Complete View of the Scene

One of the most important breakthroughs in our approach is the use of a global occupancy grid. Early methods in the field often relied on an egocentric view, where everything is positioned relative to the ego vehicle’s camera. While useful for perception tasks, this representation misses a crucial element: how all road users are positioned in the real world.

Our pipeline combines depth estimation, object motion, and lane structure to build a grid in which every actor, including the ego vehicle and the road users around it, is anchored to its actual lane and location. This global view removes the ambiguity of ego-centered coordinates and produces a scenario that can be replayed as a faithful digital twin.

This step makes it possible to preserve the interactions between vehicles, lane changes, and the flow of dense traffic in a way that feels natural and consistent once played back in simulation.
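The core of moving from an egocentric to a global view is a rigid transform: each detection's offset relative to the ego vehicle is rotated by the ego heading and translated by the ego position, after which every actor, the ego included, can be dropped into the same grid. The sketch below assumes a flat local metric frame (for example, derived from GPS), an ego frame with x forward and y left, headings in radians counter-clockwise from the global x-axis, and a hypothetical 0.5 m cell size. It places each actor at a single point and skips lane snapping; it is an illustration of the idea, not our reconstruction code.

```python
import math
from typing import Dict, Tuple

Cell = Tuple[int, int]


def ego_to_global(ego_x: float, ego_y: float, ego_heading: float,
                  rel_x: float, rel_y: float) -> Tuple[float, float]:
    """Map an ego-frame offset (x forward, y left, metres) into the global frame.

    ego_heading is in radians, counter-clockwise from the global x-axis.
    """
    c, s = math.cos(ego_heading), math.sin(ego_heading)
    return ego_x + c * rel_x - s * rel_y, ego_y + s * rel_x + c * rel_y


def to_cell(x: float, y: float, resolution: float = 0.5) -> Cell:
    """Index of the grid cell containing a global point (grid origin at 0, 0)."""
    return math.floor(x / resolution), math.floor(y / resolution)


def build_global_occupancy(frames, resolution: float = 0.5) -> Dict[float, Dict[Cell, str]]:
    """frames: iterable of (t, (ego_x, ego_y, ego_heading), [(actor_id, rel_x, rel_y), ...]).

    Returns, per timestamp, which actor occupies which global cell. The ego
    vehicle is placed in the grid exactly like every other actor.
    """
    occupancy: Dict[float, Dict[Cell, str]] = {}
    for t, (ex, ey, eh), detections in frames:
        cells = {to_cell(ex, ey, resolution): "ego"}
        for actor_id, rx, ry in detections:
            gx, gy = ego_to_global(ex, ey, eh, rx, ry)
            cells[to_cell(gx, gy, resolution)] = actor_id
        occupancy[t] = cells
    return occupancy


# Ego facing the global +y direction; a car 20 m straight ahead of it.
frames = [(0.0, (10.2, 0.1, math.pi / 2), [("car_1", 20.0, 0.0)])]
print(build_global_occupancy(frames))
# {0.0: {(20, 0): 'ego', (20, 40): 'car_1'}}
```

Because the car lands at a fixed global cell rather than "20 m ahead of the camera", its position stays meaningful even when the ego vehicle turns or changes lanes, which is what lets interactions survive playback.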

From Occupancy Grid to Simulation Standards

Once the scene has been reconstructed into a global occupancy grid, the pipeline continues with map and trajectory generation. Road geometry and vehicle behaviors are extracted and translated into structured formats.

Compatibility is a cornerstone of this process. Rather than lock scenarios into a proprietary format, we align them with the industry standards most widely used today:

- OpenDRIVE for road layouts and lane networks
- OpenSCENARIO for actor trajectories and interactions
- CommonRoad and INTERACTION for research-grade scenario exchange

This standards-first approach ensures that the scenarios can be used directly in simulators such as CARLA and esmini, and that customers can integrate them into existing V&V workflows without modification.
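As an illustration of what a standards-aligned trajectory looks like, the sketch below turns a list of timed global poses into an OpenSCENARIO 1.x `<Trajectory>` element whose shape is a `<Polyline>` of timed `<Vertex>` entries with `<WorldPosition>` coordinates. It is only a fragment: in a complete file it would sit inside a `FollowTrajectoryAction` within the Storyboard, alongside `FileHeader`, `RoadNetwork`, and `Entities`. The function name and the sample values are hypothetical, and the output should be validated against the schema of whichever OpenSCENARIO version your simulator targets.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Tuple


def trajectory_element(name: str,
                       samples: Iterable[Tuple[float, float, float, float]]) -> ET.Element:
    """Build an OpenSCENARIO-style <Trajectory> from (t, x, y, heading) samples.

    t is in seconds, x/y in metres in the world frame, heading in radians.
    """
    traj = ET.Element("Trajectory", name=name, closed="false")
    polyline = ET.SubElement(ET.SubElement(traj, "Shape"), "Polyline")
    for t, x, y, h in samples:
        vertex = ET.SubElement(polyline, "Vertex", time=f"{t:.3f}")
        position = ET.SubElement(vertex, "Position")
        ET.SubElement(position, "WorldPosition",
                      x=f"{x:.3f}", y=f"{y:.3f}", h=f"{h:.4f}")
    return traj


car = trajectory_element("car_1", [(0.0, 1.0, 2.0, 0.0), (0.5, 3.0, 2.0, 0.0)])
print(ET.tostring(car, encoding="unicode"))
```

Emitting a neutral, timed polyline like this keeps the reconstructed motion independent of any one simulator, which is the point of aligning with the standard rather than a proprietary format.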

Ensuring Fidelity and Quality

Generating scenarios automatically is only half the story. To be useful, they must also meet strict quality requirements. After reconstruction, every scenario goes through a semi-automated quality assessment, where trajectories, lane adherence, and inter-vehicle distances are verified.
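The three checks named above can be sketched as simple rules over sampled trajectories: acceleration from second differences of position (to catch abrupt motion), lateral offset from the lane center (lane adherence), and pairwise distance between actors at each timestep. All thresholds, input shapes, and function names here are hypothetical, picked only to show the kind of test involved; our actual assessment criteria are not reproduced here.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def accel_magnitudes(track: List[Point], dt: float) -> List[float]:
    """|a| at interior samples from the second difference of position."""
    out = []
    for i in range(1, len(track) - 1):
        ax = (track[i + 1][0] - 2 * track[i][0] + track[i - 1][0]) / dt ** 2
        ay = (track[i + 1][1] - 2 * track[i][1] + track[i - 1][1]) / dt ** 2
        out.append(math.hypot(ax, ay))
    return out


def check_scenario(tracks: Dict[str, List[Point]],
                   lateral_offsets: Dict[str, List[float]],
                   dt: float = 0.1,
                   max_accel: float = 8.0,   # m/s^2, hypothetical
                   half_lane: float = 1.75,  # m, hypothetical lane half-width
                   min_gap: float = 1.0):    # m, hypothetical
    """Return (actor, issue, sample_index) tuples for every failed check."""
    issues = []
    for actor, track in tracks.items():
        # accel_magnitudes()[k] belongs to sample k + 1
        for k, a in enumerate(accel_magnitudes(track, dt)):
            if a > max_accel:
                issues.append((actor, "abrupt_motion", k + 1))
        for i, offset in enumerate(lateral_offsets.get(actor, [])):
            if abs(offset) > half_lane:
                issues.append((actor, "off_lane", i))
    actors = sorted(tracks)
    for i, a in enumerate(actors):
        for b in actors[i + 1:]:
            for k, (pa, pb) in enumerate(zip(tracks[a], tracks[b])):
                if math.dist(pa, pb) < min_gap:
                    issues.append(((a, b), "too_close", k))
    return issues


tracks = {
    "ego": [(0, 0), (1, 0), (2, 0), (3, 0)],        # steady 10 m/s at dt = 0.1 s
    "car": [(0, 3.5), (1, 3.5), (2, 3.5), (6, 3.5)],  # sudden jump at the end
}
offsets = {"ego": [0.0, 0.1, 0.2, 2.0]}
print(check_scenario(tracks, offsets))
```

Flagged entries like these are the kind of inconsistency that would then be routed to refinement or review rather than silently shipped.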

When inconsistencies are found, such as abrupt motion or misaligned lane placement, refinement tools and expert review make targeted adjustments. This combination of automation and human oversight keeps the outputs realistic and reliable for simulation.
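One common, simple refinement for jittery or abrupt motion is smoothing the position series. The sketch below uses a centered moving average whose window shrinks symmetrically near the ends, so the first and last poses are preserved exactly. It is one possible smoother, named plainly here as a swapped-in technique, not necessarily what our refinement tools use; the function names and the default window are hypothetical.

```python
from typing import List, Sequence, Tuple


def moving_average(values: Sequence[float], window: int = 5) -> List[float]:
    """Centered moving average; the window shrinks symmetrically at the ends."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    n, half = len(values), window // 2
    out = []
    for i in range(n):
        h = min(half, i, n - 1 - i)  # keep the window centered on sample i
        segment = values[i - h:i + h + 1]
        out.append(sum(segment) / len(segment))
    return out


def smooth_track(track: Sequence[Tuple[float, float]], window: int = 5):
    """Smooth x and y independently; endpoints stay where they were."""
    xs = moving_average([p[0] for p in track], window)
    ys = moving_average([p[1] for p in track], window)
    return list(zip(xs, ys))


# A single lateral spike in an otherwise straight path gets flattened.
print(smooth_track([(0, 0), (1, 0), (2, 1.5), (3, 0), (4, 0)], window=3))
```

Blind smoothing also softens genuine sharp maneuvers, which is one reason targeted adjustments and expert review are paired rather than relying on filtering alone.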

Challenges on the Road Ahead

No pipeline in this space is without its challenges, and the industry as a whole is still working through some of them. Complex road geometries such as intersections, roundabouts, and unusual merges remain difficult to reconstruct with perfect accuracy. Similarly, missing or faded lane markings and fluctuating depth values can introduce errors in localization.

These are well-known technical hurdles in automated scenario generation, and they are active areas of improvement. Our roadmap includes enhancements to lane-level localization, better handling of unstructured road environments, and upgrades that improve depth consistency and trajectory smoothness.

Each iteration brings more stability and better coverage, which helps expand the range of scenarios that can be generated automatically.
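Fluctuating depth values, mentioned above as a source of localization error, often show up as isolated spikes in an object's per-frame depth. A rolling median is a standard way to suppress such outliers while leaving steady trends intact. The sketch below replaces any sample that strays more than a hypothetical `max_jump` from its local median; the window size, threshold, and function name are assumptions for illustration and do not describe our depth-consistency upgrades.

```python
from statistics import median
from typing import List, Sequence


def despike_depth(depths: Sequence[float], window: int = 5,
                  max_jump: float = 3.0) -> List[float]:
    """Replace samples that deviate from the local median by more than max_jump metres."""
    half = window // 2
    out = list(depths)
    for i in range(len(depths)):
        lo, hi = max(0, i - half), min(len(depths), i + half + 1)
        local = median(depths[lo:hi])  # median of the original, unfiltered values
        if abs(depths[i] - local) > max_jump:
            out[i] = local
    return out


# An object steadily approaching, with one spurious depth reading.
print(despike_depth([20.0, 19.5, 35.0, 18.5, 18.0]))
# [20.0, 19.5, 19.5, 18.5, 18.0]
```

A median is preferred over a mean here because a single bad reading barely moves it, so the spike is replaced instead of being smeared into its neighbors.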

Capsul3 FOUNDATION and SCENARIO: Two Complementary Paths

The reason we invest so heavily in this pipeline is that it supports two distinct but complementary products:

- Capsul3 FOUNDATION focuses on training. It delivers large-scale, multi-modal driving data enriched with tags that highlight unusual or safety-critical moments. By offering synchronized video, GPS, and IMU data with contextual annotations, it ensures developers can build and refine AI systems on high-quality, diverse raw data.

- Capsul3 SCENARIO focuses on validation. It takes the same real-world moments and reconstructs them into simulation-ready scenarios formatted to industry standards. These scenarios are designed to slot directly into simulators and verification frameworks, enabling teams to test and validate system behavior under realistic conditions.

Together, they create a closed loop: learn from tagged real-world data, then test against reconstructed scenarios that mirror the complexity of everyday driving.

Closing Thoughts

Autonomous driving will only reach maturity when real-world complexity is fully reflected in both training and validation. At MATT3R, we are building that bridge by turning everyday drives into structured, usable knowledge.

Through real-world data collection using K3Y, semi-automated reconstruction, and adherence to global standards, we are creating a living library of scenarios that grows richer with every journey.

The road ahead involves scaling these capabilities further, addressing complex road geometries, refining localization, and continuously improving fidelity. Each improvement strengthens the tools available to developers and brings the industry closer to safer, more reliable autonomy.
